What to do about a global info-and-disinfo pipeline, and who can do it?

Mark Zuckerberg’s original Facebook profile. (Photo by Niall Kennedy/ flickr CC 2.0)

Beginning in 2004, Mark Zuckerberg and his companions made a historic contribution to the annals of alchemy: They converted the lust for human contact into gold. Facebook’s current net worth is more than $500 billion, with Zuckerberg’s own share tallied at $74.2 billion, which makes him something like the fifth-wealthiest person in the world.

What can be said about Facebook can also more or less be said of Google, Twitter, YouTube and other internet platforms, but here I’ll confine myself mainly to Facebook. What a business model! Whenever its 2-billion-and-counting users click, the company (a) sells their attention to advertisers, and (b) rakes in data, which it transmutes into information that it uses to optimize the deal it offers its advertisers. Facebook is the grandest, most seductive, farthest-flung, most profitable attention-getting machine ever. Meanwhile, according to a post-election BuzzFeed analysis by Craig Silverman, who popularized the term “fake news”:

In the final three months of the US presidential campaign, the top-performing fake election news stories on Facebook generated more engagement [industry jargon for shares, reactions and comments] than the top stories from major news outlets such as The New York Times, Washington Post, Huffington Post, NBC News and others.

What has to be faced by those aghast at the prevalence of online disinformation is that it follows directly from the social-media business model. Ease of disinformation — so far, at least — is a feature, not a bug.

Zuckerberg presents himself as (and has often been lionized as) a Promethean bringer of benefits to all humanity. He is always on the side of the information angels. He does not present himself as an immoralist, like latter-day Nazi-turned-American-missile-scientist Wernher von Braun, as channeled by Tom Lehrer in this memorable lyric:

Don’t say that he’s hypocritical

Say rather that he’s apolitical

“Once the rockets are up, who cares where they come down?

That’s not my department!” says Wernher von Braun.

To the contrary, Zuckerberg is a moralist. He does not affect to be apolitical. He wants his platform to be a “force for good in democracy.” He wants to promote voting. He wants to “give all people a voice.” These are political values. Which is a fine thing. Whenever you hear powerful people purport to be apolitical, check your wallet.

There’s no evidence that in 2004, when Mark Zuckerberg and his computer-savvy Harvard buddies devised their amazing apparatus and wrote the code for it, they intended to expose Egyptian police torture and thereby mobilize Egyptians to overthrow Hosni Mubarak, or to help white supremacists distribute their bile, or to throw open the gates through which Russians could cheaply circulate disinformation about American politics. They were ingenious technicians who wholeheartedly shared the modern prejudice that more communication means more good for the world — more connection, more community, more knowledge, more, more, more. They were not the only techno-entrepreneurs who figured out how to keep their customers coming back for more, but they were among the most astute. These engineer-entrepreneurs devised intricate means toward a time-honored end, pursuing the standard modern media strategy: package the attention of viewers and readers into commodities that somebody pays for. Their product was our attention.

Sounds like a nifty win-win. The user gets (and relays) information, and the proprietor, for supplying the service, gets rich. Information is good, so the more of it, the better. In Zuckerberg’s words, the goal was, and remains, “a community for all people.” What could go wrong?

So it’s startling to see the cover of this week’s Economist, a publication not hitherto noted for hostility to global interconnection under the auspices of international capital. The magazine’s cover graphic shows the Facebook “f” being wielded as a smoking gun. The cover story asks, “Do social media threaten democracy?” and proceeds to cite numbers that have become fairly familiar now that American politicians are sounding alarms:

Facebook acknowledged that before and after last year’s American election, between January 2015 and August this year, 146 million users may have seen Russian misinformation on its platform. Google’s YouTube admitted to 1,108 Russian-linked videos and Twitter to 36,746 accounts. Far from bringing enlightenment, social media have been spreading poison.

Facebook’s chief response to increasingly vigorous criticism is an engineer’s rationalization: that it is a technological thing — a platform, not a medium. You may call that fatuously naïve. You may recognize it as a commonplace instance of the Silicon Valley belief that if you figure out a way to please people, you are entitled to make tons of money without much attention to potentially or actually destructive social consequences. It’s reminiscent of what Thomas Edison would have said if asked if he intended to bring about the electric chair, Las Vegas shining in the desert, or, for that matter, the internet; or what Johannes Gutenberg would have said if asked if he realized he was going to make possible the Communist Manifesto and Mein Kampf. Probably something like: We’re in the tech business. And: None of your business. Or even: We may spread poison, sure, but also candy.

This candy not only tastes good, Zuckerberg believes, but it’s nutritious. Thus on Facebook — his preferred platform, no surprise — Mark Zuckerberg calls his brainchild “a platform for all ideas” and defends Facebook’s part in the 2016 election with this ringing declaration: “More people had a voice in this election than ever before.” It’s a bit like saying that Mao Zedong succeeded in assembling the biggest crowds ever seen in China, but never mind. Zuckerberg now “regrets” saying after the election that it was “crazy” to think that “misinformation on Facebook changed the outcome of the election.” But he still boasts about “our broader impact … giving people a voice to enabling candidates to communicate directly to helping millions of people vote.”

Senators as well as journalists are gnashing their teeth. Hearings are held. As always when irresponsible power outruns reasonable regulation, the first recourse of reformers is disclosure. This is, after all, the age of freedom of information (see my colleague Michael Schudson’s book, The Rise of the Right to Know: Politics and the Culture of Transparency, 1945–1975). On the top-10 list of cultural virtues, transparency has moved right up next to godliness. Accordingly, Sens. Amy Klobuchar, Mark Warner and John McCain have introduced an Honest Ads Act, requiring disclosure of the sources of funds for online political ads and (in the words of the senators’ news release) “requiring online platforms to make all reasonable efforts to ensure that foreign individuals and entities are not purchasing political advertisements in order to influence the American electorate.” (A parallel bill has been introduced in the House.) And indeed, disclosure is a good thing, a place to start.

What to do? That’s the question of the hour. The midterm elections of 2018 are less than a year away.

Europeans have their own ideas, which make Facebook unhappy, though it ought not be surprising that a global medium runs into global impediments — and laws. In September the European Union told Facebook, Twitter and other social media to take down hate speech or face legal consequences. In May 2016, the companies had “promised to review a majority of hate speech flagged by users within 24 hours and to remove any illegal content.” But 17 months later, the EU’s top regulator said the promise wasn’t good enough, for “in more than 28 percent of cases, it takes more than one week for online platforms to take down illegal content.” Meanwhile, Europe has no First Amendment to impede online (or other) speech controls. Holocaust denial, to take a conspicuous example, is a crime in 16 countries. And so, consider a German law that went into effect on Oct. 1 to force Facebook and other social-media companies to conform to federal law governing the freedom of speech. According to The Atlantic:

The Netzwerkdurchsetzungsgesetz, or the “Network Enforcement Law,” colloquially referred to as the “Facebook Law,” allows the government to fine social-media platforms with more than 2 million registered users in Germany … up to 50 million euros for leaving “manifestly unlawful” posts up for more than 24 hours. Unlawful content is defined as anything that violates Germany’s Criminal Code, which bans incitement to hatred, incitement to crime, the spread of symbols belonging to unconstitutional groups and more.

As for the United Kingdom, Facebook has not responded to charges that foreign intervention through social media also tilted the Brexit vote. As Carole Cadwalladr writes in The Guardian:

No ads have been scrutinized. Nothing — even though Ben Nimmo of the Atlantic Council think tank, asked to testify before the Senate intelligence committee last week, says evidence of Russian interference online is now “incontrovertible.” He says: “It is frankly implausible to think that we weren’t targeted too.”

Then what? In First Amendment America, of course, censorship laws would never fly. Then can reform be left up to Facebook management?

Unsurprisingly, that’s what the company wants. At congressional hearings last week, their representatives said that by the end of 2018 they would double the number of employees who would inspect online content. But as New York Times reporters Mike Isaac and Daisuke Wakabayashi wrote, “in a conference call with investors, Facebook said many of the new workers are not likely to be full-time employees; the company will largely rely on third-party contractors.”

Suppose the company is serious about scrubbing its content of lies and defamations. It’s unlikely that temps and third-party contractors, however sage, however algorithm-equipped, can do the job. So back to the question: Beyond disclosure, which is a no-brainer, what’s to be done, and by whom?

For one thing, as the fierce Facebook critic Zeynep Tufekci notes, many on-the-ground employees are troubled by less-than-forceful actions by company owners. Why don’t wise heads in the tech world reorganize Computer Professionals for Social Responsibility (which existed through 2013)? Through recent decades, we have seen excellent organizations of this sort arise from many professional quarters: scientists (nuclear scientists in particular), lawyers, doctors, social workers and so on. Ever since 1921, when in a short book called The Engineers and the Price System the great economic historian Thorstein Veblen looked to engineers to overcome the venality of the corporations that employed them, such dreams have led a sort of subterranean, but sometimes aboveground, life.

The Economist is not interested in such radical ideas. Having sounded an alarm about the toxicity of social media, The Economist predictably — and reasonably — goes on to warn against government intrusion. Also reasonably, it allots responsibility to thoughtless consumers, though while blaming the complicit victims it might well reflect on the utter breakdown of democratic norms under the spell of Republican fraudulence and insanity:

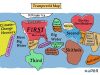

[P]olitics is not like other kinds of speech; it is dangerous to ask a handful of big firms to deem what is healthy for society. Congress wants transparency about who pays for political ads, but a lot of malign influence comes through people carelessly sharing barely credible news posts. Breaking up social-media giants might make sense in antitrust terms, but it would not help with political speech — indeed, by multiplying the number of platforms, it could make the industry harder to manage.

Not content to stop there, though, The Economist offers other remedies:

The social-media companies should adjust their sites to make clearer if a post comes from a friend or a trusted source. They could accompany the sharing of posts with reminders of the harm from misinformation. Bots are often used to amplify political messages. Twitter could disallow the worst — or mark them as such. Most powerfully, they could adapt their algorithms to put clickbait lower down the feed. Because these changes cut against a business model designed to monopolize attention, they may well have to be imposed by law or by a regulator.

But in the US, it’s time to consider more dramatic measures. Speaking of disclosure, many social scientists outside the company would like Facebook to open up more of its data — for one reason among others, to understand how their algorithms work. There are those in the company who say they would respond reasonably if reformers and researchers got specific about what data they want to see. What specifically should they ask?

Should there be, along British lines, a centrally appointed regulatory board? Since 2003, the UK has had an Office of Communications with regulatory powers. Its board is appointed by a Cabinet minister. Britain also has a press regulation apparatus for newspapers. How effective these are I cannot say. In the US, should a sort of council of elders be established in Washington, serving staggered terms, to minimize political rigging? But if so, what happens when Steve Bannon gets appointed?

Columbia law professor Tim Wu, author of The Attention Merchants: The Epic Scramble to Get Inside Our Heads, advocates converting Facebook into a public benefit or nonprofit company. The logic is clear, though for now it’s a nonstarter.

But we badly need the debate.

The Economist’s conclusion is unimpeachable:

Social media are being abused. But, with a will, society can harness them and revive that early dream of enlightenment. The stakes for liberal democracy could hardly be higher.

The notion of automatic enlightenment through clicks was, of course, a pipe dream. What’s more plausible today is a nightmare. It is no longer a decent option for a democracy — even a would-be democracy — to stand by mumbling incantations to laissez faire while the institutions of reason are shaking.